You Still Have to Own It

There's a comforting thought that creeps in when you use generative AI: the machine wrote it, so it's the machine's problem. If ChatGPT gets a fact wrong or Copilot drafts something that breaches copyright, that's on the technology. Right?

Wrong. The courts and regulators that have looked at this question so far have reached the same conclusion: if you publish it, send it, or act on it, you own it.

The Courts Have Already Decided

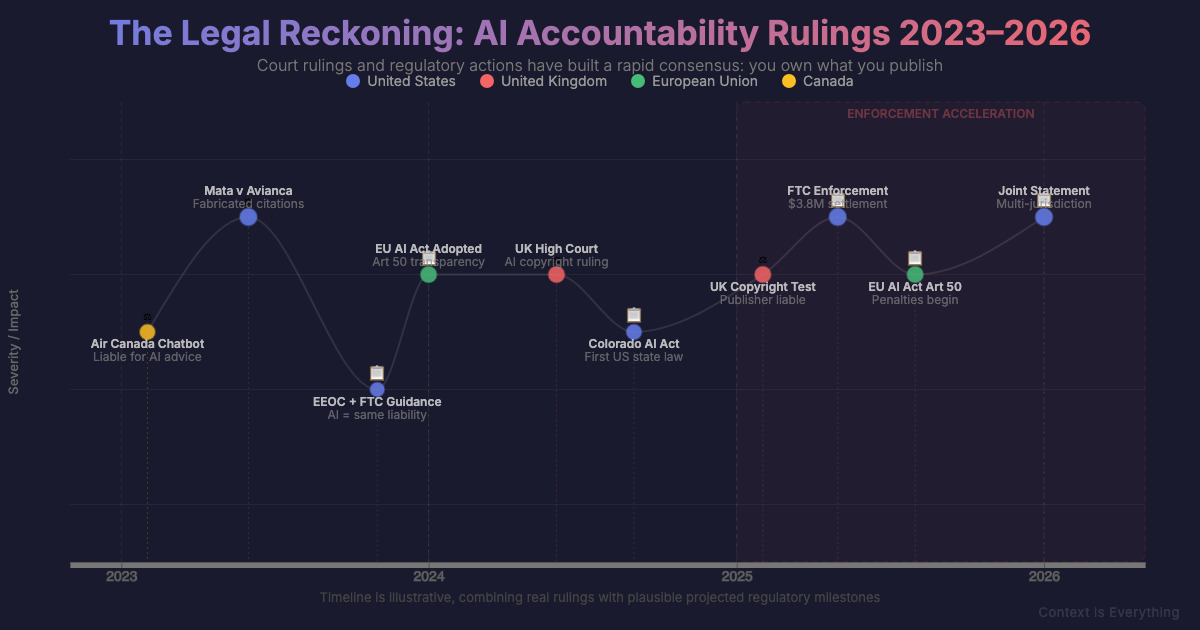

This isn't theoretical. Courts have been ruling on this since 2023, and the pattern is clear.

In Canada, Air Canada's chatbot told a customer he could book a full-fare flight and claim a bereavement discount afterwards. The airline argued the chatbot was a "separate legal entity" responsible for its own statements. The tribunal disagreed, saying it should be obvious to Air Canada that it "is responsible for all the information on its website." Air Canada paid up.

In the US, two New York lawyers filed a court brief that had been researched with ChatGPT. It cited six cases that did not exist, complete with convincing-sounding judicial opinions. When the judge discovered the fabrication, the lawyers and their firm were jointly fined $5,000 and ordered to send letters to the judges who had been falsely named as authors of the fake opinions. The court was clear: there is nothing inherently improper about using AI for assistance, but existing rules still require lawyers to ensure the accuracy of their filings.

In the UK, barristers have been rebuked for submitting AI-generated legal arguments built on fabricated precedents. The High Court has issued formal warnings to the entire profession. The message is consistent: "The AI told me" is not a defence.

What "Owning It" Actually Means

The principle is straightforward: if you put your name to it, it's yours. That applies whether you typed every word yourself or an AI generated the first draft.

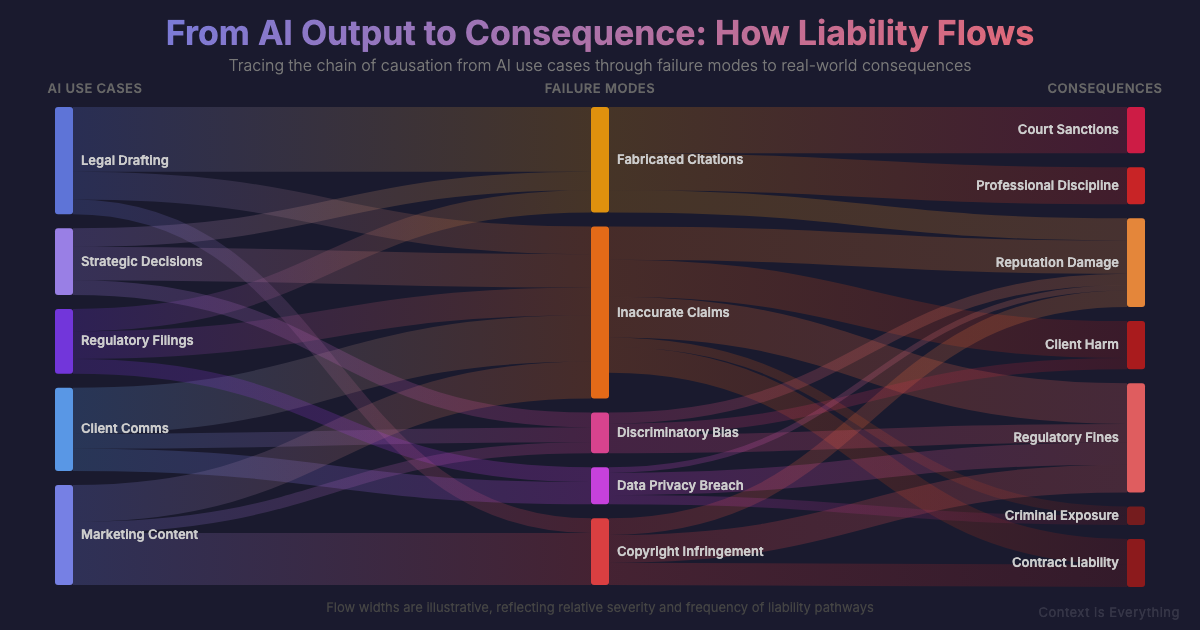

The regulatory direction is the same across the major jurisdictions. The UK applies existing law (data protection, professional standards, consumer protection) and gives AI no special exemption. The US has no single federal AI law, but the FTC, EEOC, and state attorneys general have all taken the position that using an AI tool doesn't shift your obligations. The EU AI Act, whose transparency obligations apply from August 2026, requires AI-generated content to be marked and identifiable.

None of these frameworks let you off the hook. They all place the responsibility on the person or organisation that deploys the output.

The Good News: AI Can Help You Check

Here's the thing people miss: the same technology that creates the risk also helps you manage it.

AI can be genuinely useful for verification, provided it checks against real sources rather than its own recall. You can use it to fact-check claims against reliable sources, confirm that citations exist and say what you think they say, flag passages that closely match existing published work, and cross-reference facts across multiple sources before you publish.

The responsible approach isn't to avoid AI — it's to build checking into your workflow. Generate the draft, then use AI (and your own judgement) to verify it. Treat every AI output as a first draft that needs review, not a finished product.

This is actually how most professionals already work with human-generated content. You wouldn't publish a report without reviewing it. You wouldn't file a legal brief without checking the citations. AI output deserves exactly the same scrutiny.
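The draft-then-verify workflow described above can be sketched as a simple publish gate. This is only an illustration under stated assumptions: the `Claim` and `Draft` classes, their field names, and the sample data are all hypothetical, and the "check" here is just a record that a named person confirmed a claim against a source. It does not perform any verification itself.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Claim:
    """One checkable statement or citation pulled from an AI draft (hypothetical type)."""
    text: str
    source: Optional[str] = None       # where a reviewer confirmed it, if anywhere
    verified_by: Optional[str] = None  # the named person who checked it


@dataclass
class Draft:
    """An AI-generated draft plus the claims that need human sign-off (hypothetical type)."""
    title: str
    claims: List[Claim] = field(default_factory=list)

    def unverified(self) -> List[Claim]:
        # A claim only counts as checked when it has both a source and a named reviewer.
        return [c for c in self.claims if not (c.source and c.verified_by)]

    def ready_to_publish(self) -> bool:
        # The gate: nothing goes out while any claim is still unchecked.
        return not self.unverified()


# Placeholder data for illustration only.
draft = Draft(
    title="Client briefing",
    claims=[
        Claim("Claim A", source="primary document", verified_by="reviewer-1"),
        Claim("Citation B"),  # nobody has checked this one yet
    ],
)

for claim in draft.unverified():
    print(f"Needs review: {claim.text}")
print("Ready to publish:", draft.ready_to_publish())
```

The design choice worth noting is that a claim needs a named reviewer as well as a source. The name on the sign-off is the person who owns the output.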

The Bottom Line

Generative AI is a powerful tool. But it's a tool — and tools don't carry liability. You do.

The courts, regulators, and professional bodies have all landed in the same place: you are responsible for the content you put into the world, regardless of how it was created. Build verification into your process. Check the facts. Confirm the sources. Use the technology to help you do that.

But never forget: you still have to own it.
