The Two Moats: Why Consultancies' AI Advantages Are Structural, Not Timing


Most professional services firms are still asking "should we explore AI?" The firms pulling ahead are already in production. But the advantage isn't timing. It's structural.

Two competitive moats are forming in professional services. Neither can be bought, replicated, or rushed.

Moat One: Your Private Data

Public LLMs are trained on public data. Books, articles, filings, published research. That's the ceiling for any generic AI tool.

Expert consultancies hold something different entirely: years of confidential client work. Thousands of engagements. Proprietary patterns in what actually works versus what merely looks good in a case study.

This private corpus is irreplaceable. A financial advisory firm with decades of deal structuring data sees patterns no public model will ever access. An executive assessment firm with thousands of confidential leadership evaluations has calibration data generic AI cannot replicate. A pharmaceutical consultancy with hundreds of regulatory submissions knows what actually determines approval — not what textbooks claim.

When you train AI on this private corpus, the outputs aren't just better. They're categorically different from anything a generic tool produces. Most AI projects fail because of missing context, not because of the technology. And private data is the richest context there is.

Moat Two: Your Custom Tooling

Everyone can subscribe to Claude or GPT-4. Model access is commoditised. That's the baseline, not the differentiator.

The real advantage is what you build on top of these models. Custom workflows designed for your domain. Domain-specific optimisation that generic tools can't match. Retrieval strategies tuned to your professional context. Prompt architectures calibrated against years of outcomes.

Saying "we use GPT-4" is like saying "we use Microsoft Word." It tells you nothing about the sophistication of what's actually being produced.

The consultancies creating genuine AI advantage have been continuously building domain-specific tooling. Each iteration compounds. Each refinement adds to a body of implementation knowledge that competitors starting fresh simply don't have.

Why These Moats Compound

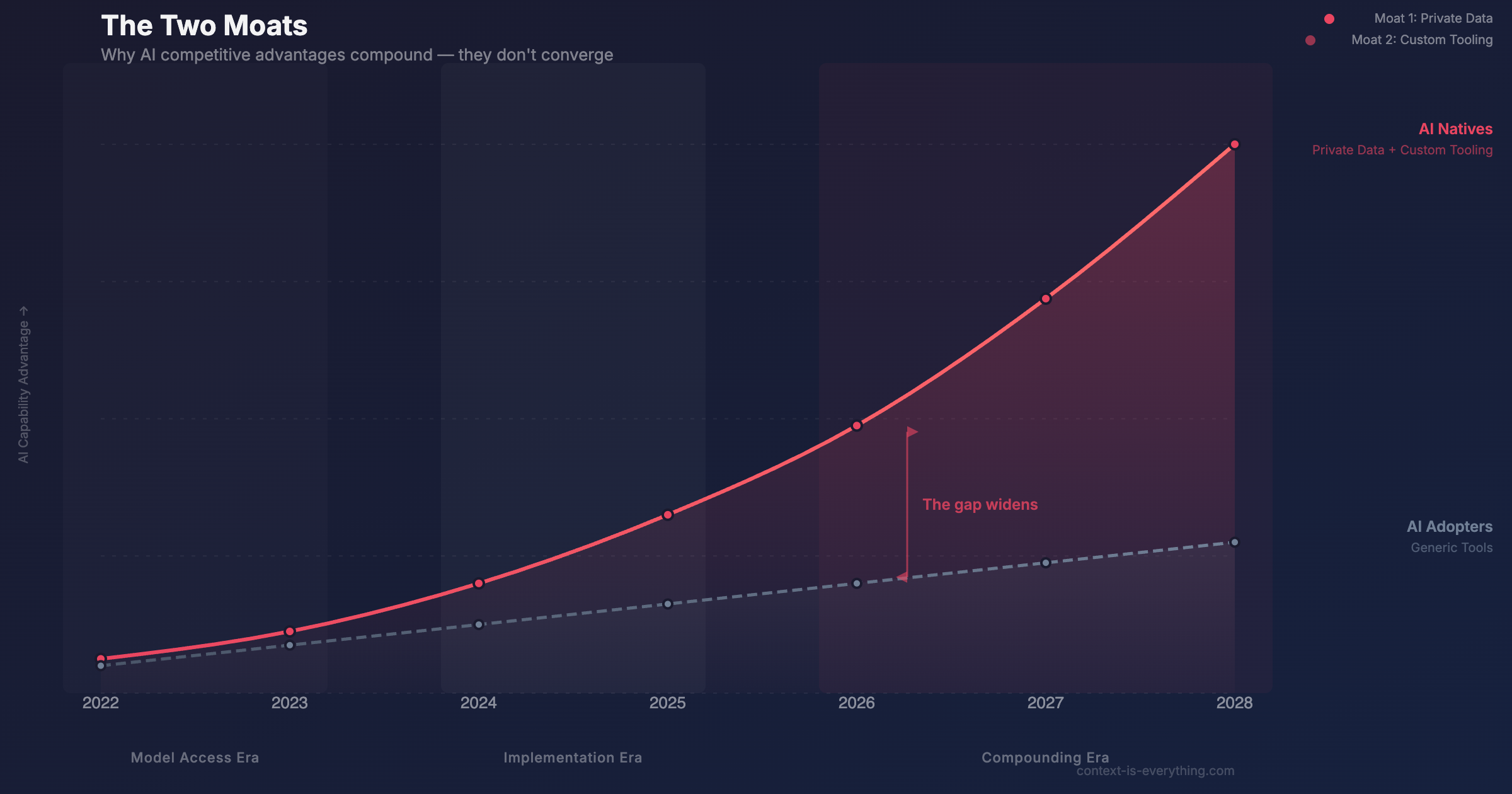

Here's what makes these advantages structural rather than timing-based: they compound as base models improve.

When the next generation of frontier models launches, firms with sophisticated custom tooling see multiplicative gains. Better base capabilities multiplied by deeper domain expertise multiplied by richer private data. Firms using generic tools see incremental gains at best.
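To make the multiplicative claim concrete, here is a minimal sketch in Python. It assumes output quality can be modelled as base capability times a domain-tooling multiplier times a private-data multiplier; the function names and the numbers are hypothetical illustrations, not measurements.

```python
def output(base: float, domain: float = 1.0, data: float = 1.0) -> float:
    """Toy model: output quality as a product of three factors.

    base   -- capability of the underlying frontier model
    domain -- multiplier from custom tooling (1.0 = generic tool, no tooling)
    data   -- multiplier from private data (1.0 = public data only)
    All three are hypothetical, unitless scores.
    """
    return base * domain * data


def gap(base: float, domain: float, data: float) -> float:
    """Absolute lead of a specialised firm over a firm using the model as-is."""
    return output(base, domain, data) - output(base)


# Hypothetical scenario: the base model improves from 1.0 to 1.2.
# The specialised firm is assumed to have 1.5x tooling and 2.0x data multipliers.
gap_before = gap(1.0, domain=1.5, data=2.0)
gap_after = gap(1.2, domain=1.5, data=2.0)

print(f"lead before upgrade: {gap_before:.2f}")
print(f"lead after upgrade:  {gap_after:.2f}")
```

Under these assumptions the specialised firm's absolute lead grows whenever the base model improves, because the same multipliers apply to a larger base. The ratio between the two firms stays constant in this toy model; it is the absolute gap that widens.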

The gap widens. It doesn't close.

This is the opposite of what most people assume. The concern is usually: "Won't better models level the playing field?" The reality: better models make private data more valuable, because there's more sophisticated pattern recognition to apply to it. Better models make custom tooling more valuable, because there are more capabilities to optimise for.

What Can't Be Rushed

Neither moat can be purchased or accelerated past a certain point.

Private data accumulates through years of trusted client relationships. There's no shortcut. Custom tooling is refined through continuous production deployment. Reading about it isn't the same as doing it. The talent to maintain both — people who've lived AI integration, not just theorised about it — takes time to develop.

The test of any lasting transformation applies here directly: would it keep working if everyone who built it left tomorrow? The consultancies building AI capability as infrastructure rather than rented expertise are creating something that survives personnel changes and market shifts.

The Question for Buyers

If you're selecting a professional services provider, ask two questions:

The professional services industry is dividing into AI natives and AI adopters. The structural moats are already forming. Check where your organisation stands — and on which side you're building.
